Robo-Centric World Model

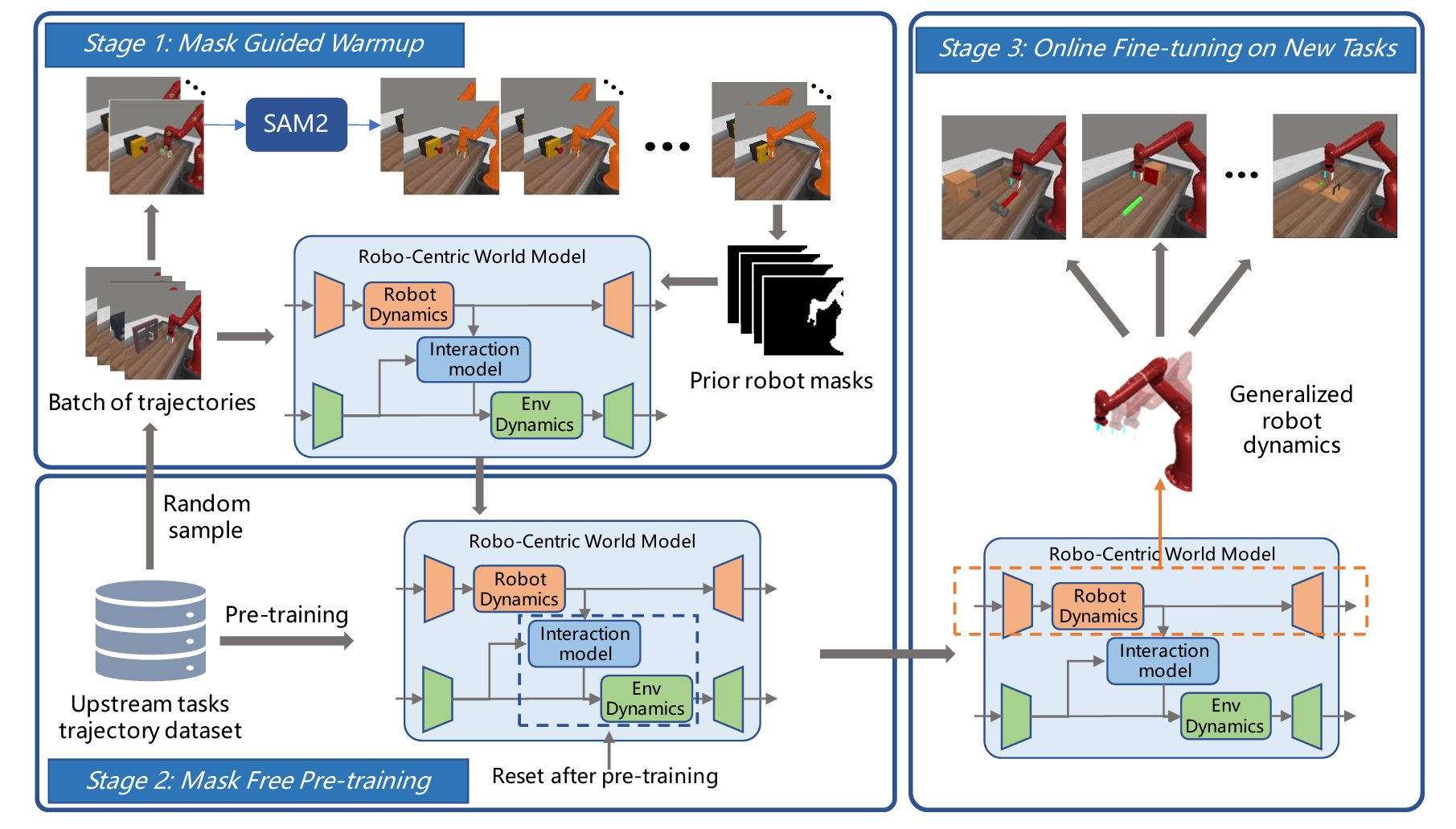

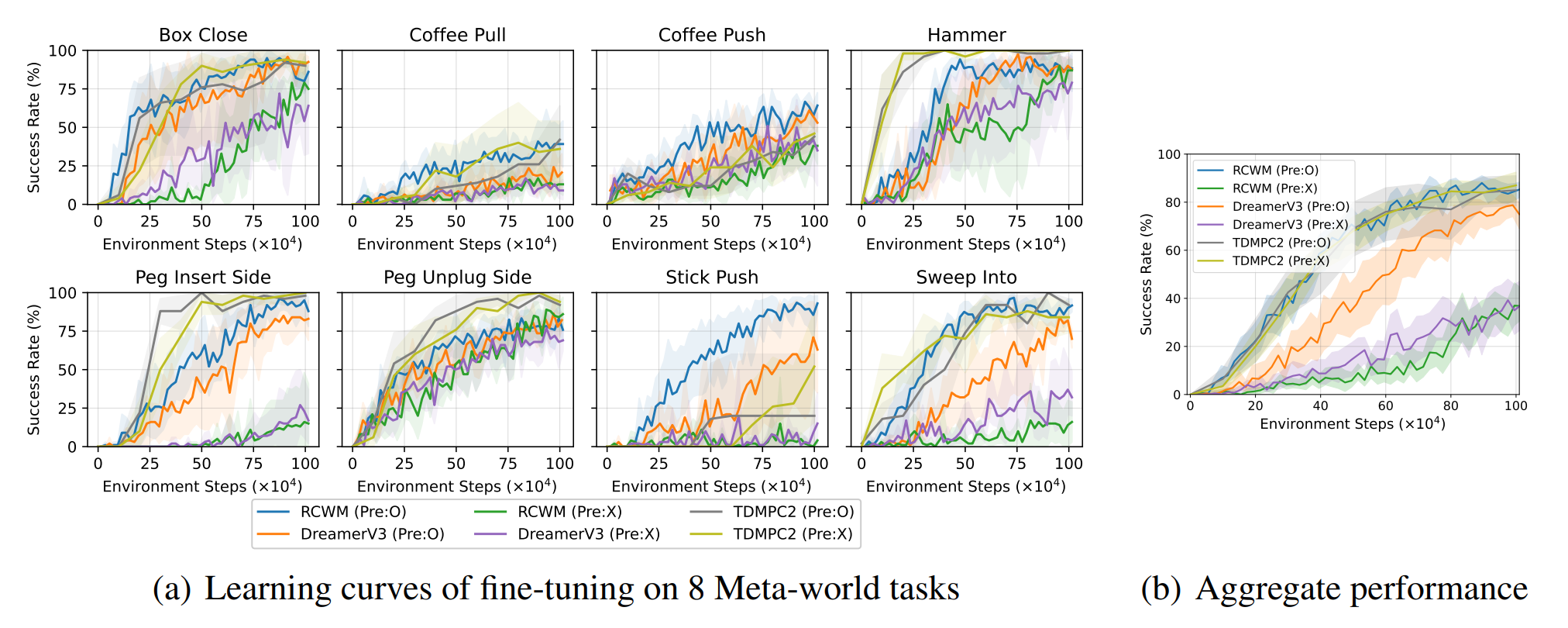

Compared with the vanilla world models in DreamerV3 and TD-MPC2, the pre-trained RCWM can exploit the prior knowledge of robot dynamics extracted during pre-training to further improve sample efficiency on downstream tasks.

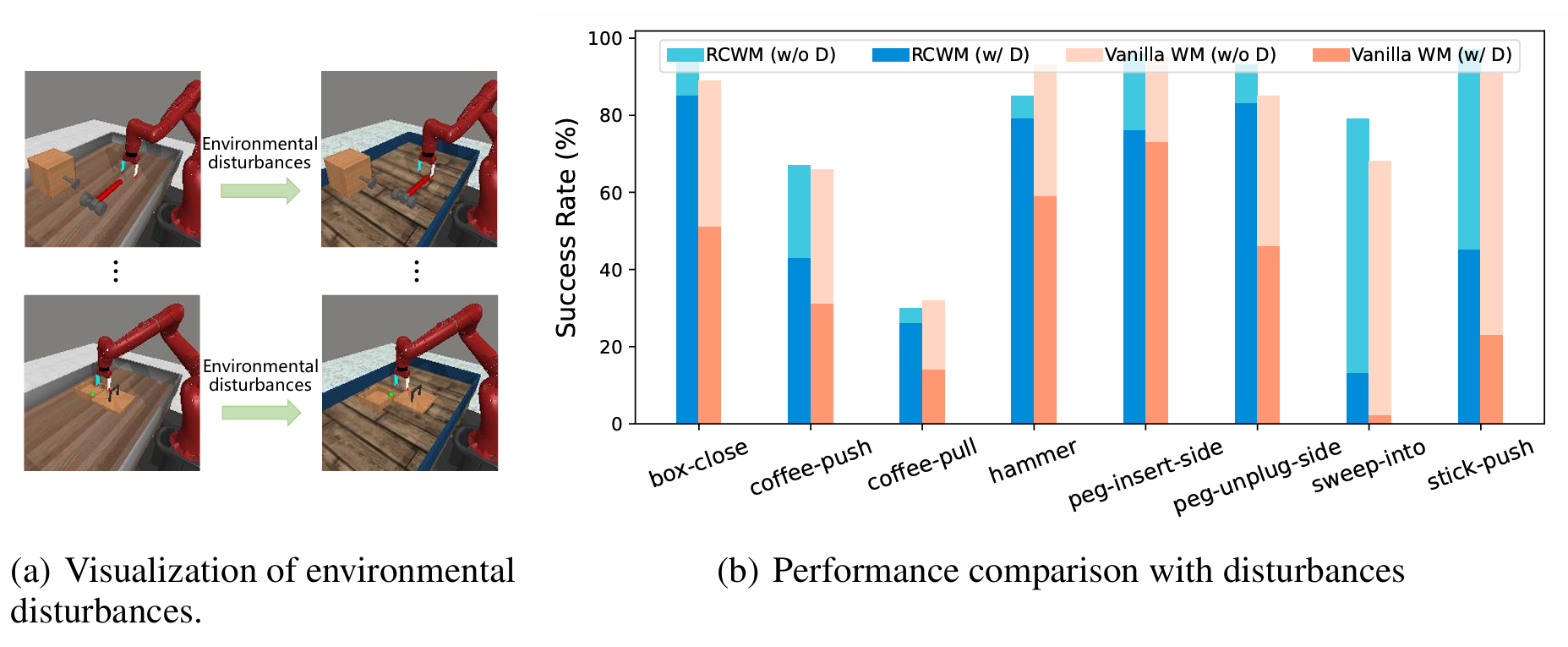

When changes in the environment introduce disturbances, RCWM is more robust than the vanilla world model and continues to provide accurate robot state representations to the policy.

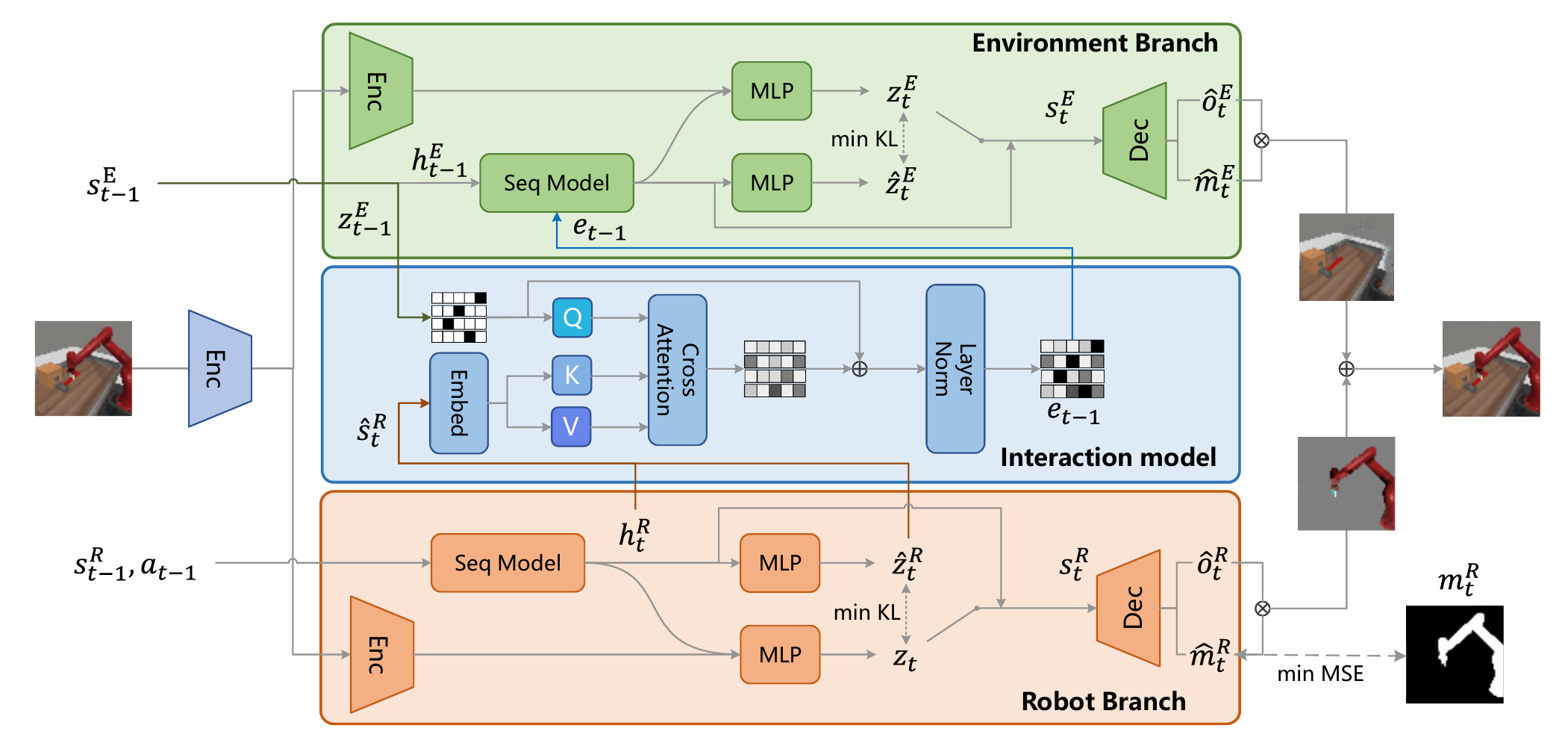

When applied to unseen downstream tasks, the pre-trained RCWM consistently delivers accurate predictions of how the robot moves in response to actions. Even when the environment observations are replaced with random noise, the robot branch's predictions remain almost unaffected.
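The noise-replacement test above can be illustrated with a toy two-branch model. This is a minimal sketch, not RCWM's actual architecture: it assumes the robot branch reads only the robot observation and the action, while the environment branch reads the environment observation plus the robot branch's latent. The dimensions, random weights, and the functions `robot_branch`, `env_branch`, and `step` are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, for illustration only.
ROBOT_DIM, ENV_DIM, ACT_DIM, HID = 8, 16, 4, 32

# Hypothetical random weights standing in for trained networks.
W_robot = rng.normal(size=(ROBOT_DIM + ACT_DIM, HID)) * 0.1
W_robot_out = rng.normal(size=(HID, ROBOT_DIM)) * 0.1
W_env = rng.normal(size=(ENV_DIM + HID, HID)) * 0.1
W_env_out = rng.normal(size=(HID, ENV_DIM)) * 0.1


def robot_branch(robot_obs, action):
    """Predict the next robot state from the robot observation and action only."""
    h = np.tanh(np.concatenate([robot_obs, action]) @ W_robot)
    return h, robot_obs + h @ W_robot_out


def env_branch(env_obs, robot_latent):
    """Predict the next environment state, conditioned on the robot latent."""
    h = np.tanh(np.concatenate([env_obs, robot_latent]) @ W_env)
    return env_obs + h @ W_env_out


def step(robot_obs, env_obs, action):
    robot_latent, next_robot = robot_branch(robot_obs, action)
    next_env = env_branch(env_obs, robot_latent)
    return next_robot, next_env


robot_obs = rng.normal(size=ROBOT_DIM)
env_obs = rng.normal(size=ENV_DIM)
action = rng.normal(size=ACT_DIM)

clean_robot, clean_env = step(robot_obs, env_obs, action)
# Replace the environment observation with random noise.
noisy_robot, noisy_env = step(robot_obs, rng.normal(size=ENV_DIM), action)

print(np.allclose(clean_robot, noisy_robot))  # True: the robot branch never reads env_obs
print(np.allclose(clean_env, noisy_env))      # False: the environment prediction changes
```

In this toy the invariance is exact because, by construction, the robot branch has no path from the environment observation. The "almost unaffected" behaviour reported for RCWM suggests a similar separation, but how closely the real model matches this sketch is an assumption.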

The interaction model in RCWM uses a cross-attention mechanism to capture how the robot's predicted movement will affect the state of the environment. As a result, the environment branch generates predictions that are consistent with the robot movement predicted by the robot branch.
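The page does not give the interaction model's equations, so the following is only a generic scaled dot-product cross-attention sketch. It assumes that environment tokens act as queries and the robot branch's predicted tokens act as keys and values, so each environment token gathers information about the robot's movement. The token counts, projection weights, residual update, and the function name `cross_attention` are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(env_tokens, robot_tokens, W_q, W_k, W_v):
    """Environment tokens (queries) attend to predicted robot tokens (keys/values).

    env_tokens:   (N_env, D)
    robot_tokens: (N_robot, D)
    Returns updated env tokens (N_env, D) and attention weights (N_env, N_robot).
    """
    q = env_tokens @ W_q
    k = robot_tokens @ W_k
    v = robot_tokens @ W_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    attn = softmax(scores, axis=-1)
    # Residual update: each env token is shifted by a weighted mix of robot features.
    return env_tokens + attn @ v, attn


rng = np.random.default_rng(0)
D, N_ENV, N_ROBOT = 16, 6, 3  # hypothetical sizes
W_q, W_k, W_v = (rng.normal(size=(D, D)) / np.sqrt(D) for _ in range(3))

env_tokens = rng.normal(size=(N_ENV, D))
robot_tokens = rng.normal(size=(N_ROBOT, D))  # stand-in for the robot branch's predicted latents

updated_env, attn = cross_attention(env_tokens, robot_tokens, W_q, W_k, W_v)
print(updated_env.shape)  # (6, 16)
print(attn.sum(axis=-1))  # each row sums to 1
```

Because the robot tokens supply the keys and values, changing the predicted robot movement changes the updated environment tokens, which is one way an environment branch can stay consistent with the robot branch's predictions.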